The Apple Worldwide Developers Conference (WWDC) 2023 keynote concluded on 5 June with a slew of announcements, including features that fall in the realm of artificial intelligence (AI).

Among the hardware revealed were the highly anticipated 15-inch MacBook Air, Mac Studio, and Mac Pro powered by the M2 chipset. The software releases included the latest iOS 17, iPadOS 17 and watchOS 10 — all of which were expected to be announced at the event.

Of course, the talking point was Apple’s augmented reality (AR) headset named Vision Pro.

“Welcome to the era of spatial computing with Apple Vision Pro. You’ve never seen anything like this before!” tweeted Apple CEO Tim Cook with a video showing the amazing features of the device.

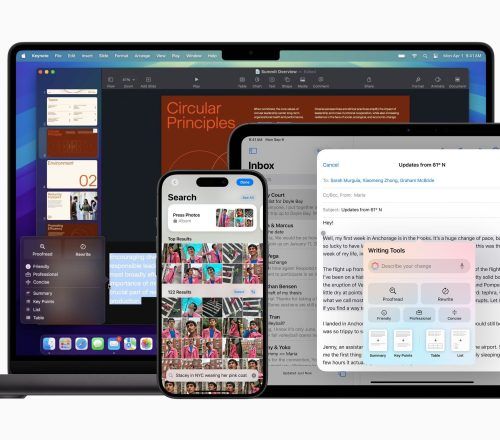

While Vision Pro grabbed most of the attention, Apple also introduced AI-based features in several products at WWDC 2023.

Interestingly, Apple did not mention “AI” even once in its presentation, even though it is currently the industry’s biggest buzzword and competitors Google and Microsoft are widely seen as well ahead of Apple in that sphere.

Apple’s term was “machine learning” (ML). Though often used interchangeably with AI, machine learning is technically a subset of it.

Microsoft defines machine learning as “the process of using mathematical models of data to help a computer learn without direct instruction.” This means that a computer system is able to learn and improve from experience.

Nevertheless, Apple’s emphasis on machine learning in some of its products was noteworthy because the company did mention a “transformer language model” during the presentation.

For the uninitiated, a transformer language model is, simply put, a neural network that learns context from its input and responds coherently. Google researchers introduced the transformer architecture in a 2017 paper. It underpins the most famous AI chatbots, including ChatGPT.
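To make "learning context from an input" a little more concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation of the transformer architecture. It is an illustration only, not Apple's model: the three tokens and their two-dimensional embeddings are made up, and the learned projection matrices that a real transformer applies are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Simplification: a real transformer first multiplies the inputs by
    learned query, key and value matrices; they are omitted here.
    """
    dim = len(vectors[0])
    scale = math.sqrt(dim)
    weights = []
    for query in vectors:
        # Similarity of this token to every token in the input.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        weights.append(softmax(scores))
    # Each output is a weighted mix of all input vectors.
    outputs = [
        [sum(w * v[d] for w, v in zip(row, vectors)) for d in range(dim)]
        for row in weights
    ]
    return outputs, weights

# Hypothetical tokens and embeddings, chosen only for illustration.
tokens = ["see", "you", "soon"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one token "attends" to every token in the input, which is how the network folds surrounding context into its representation of each word.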

Features where Apple talked about machine learning

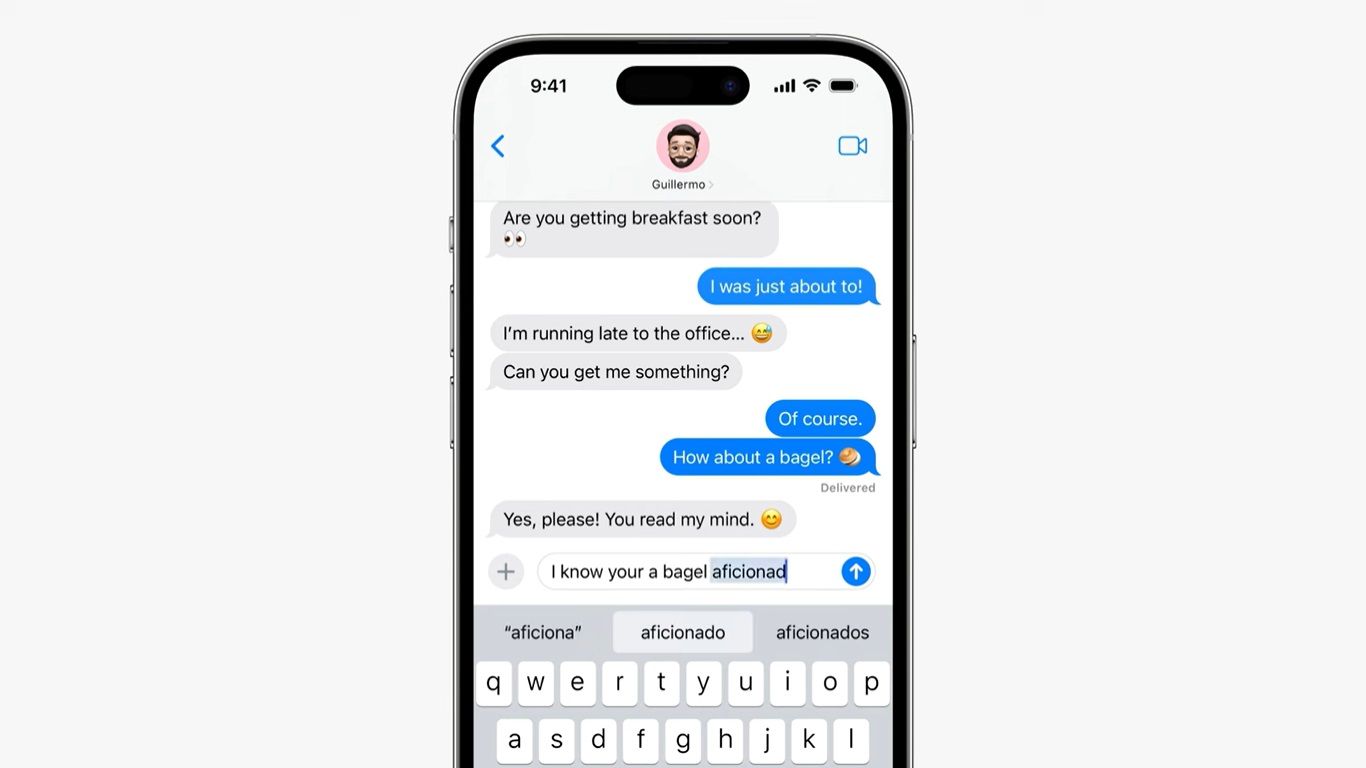

Autocorrect and Dictation improvements in iOS 17

Craig Federighi, the senior vice president (SVP) of Software Engineering at Apple, mentioned both machine learning and a transformer language model when talking about the new dictation and autocorrect features during the iOS 17 presentation at WWDC 2023.

“Autocorrect is powered by on-device machine learning, and over the years, we’ve continued to advance these models. The keyboard now leverages a transformer language model, which is state of the art for word prediction, making autocorrect more accurate than ever,” he said.

Federighi added that Apple Silicon will enable the iPhone to run this model every time users tap a key.

Apple said in a statement that the transformer language model will improve the typing experience and accuracy each time users type. The company said that inline predictive text recommendations will appear as users type, and that complete sentences can be inserted by tapping the space bar.

Autocorrect also works at the sentence level, meaning that the keyboard will be able to suggest entire sentences as messages are typed. It will also learn the user’s typing habits, including intentional misspellings and the “ducking word,” a euphemism for a swear word, and suggest words or phrases accordingly.
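The idea of a keyboard that adapts to a user's typing habits can be illustrated with a toy example. The sketch below is not Apple's approach: it swaps the transformer for a far simpler technique, counting which word the user has previously typed after each word (a bigram model). The class name `NextWordSuggester` and the sample messages are hypothetical.

```python
from collections import Counter, defaultdict

class NextWordSuggester:
    """Toy predictor that counts which word followed each word the user typed."""

    def __init__(self):
        # Maps a word to a Counter of the words seen right after it.
        self.following = defaultdict(Counter)

    def learn(self, sentence):
        """Update the counts from one message the user has typed."""
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            self.following[current][nxt] += 1

    def suggest(self, word, limit=3):
        """Return up to `limit` next-word candidates, most frequent first."""
        counts = self.following.get(word.lower())
        if not counts:
            return []
        return [candidate for candidate, _ in counts.most_common(limit)]

# Hypothetical typing history, used only for illustration.
suggester = NextWordSuggester()
for message in ["see you soon", "see you tomorrow", "see you soon buddy", "thank you so much"]:
    suggester.learn(message)

print(suggester.suggest("you"))
```

Because the counts come only from what the user has typed, the suggestions drift toward that user's own vocabulary over time, intentional misspellings included. A transformer model goes much further by weighing the whole sentence rather than just the previous word.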

Federighi also said that the Dictation feature gets a “Transformer-based speech recognition model that leverages the Neural Engine” for more accuracy.

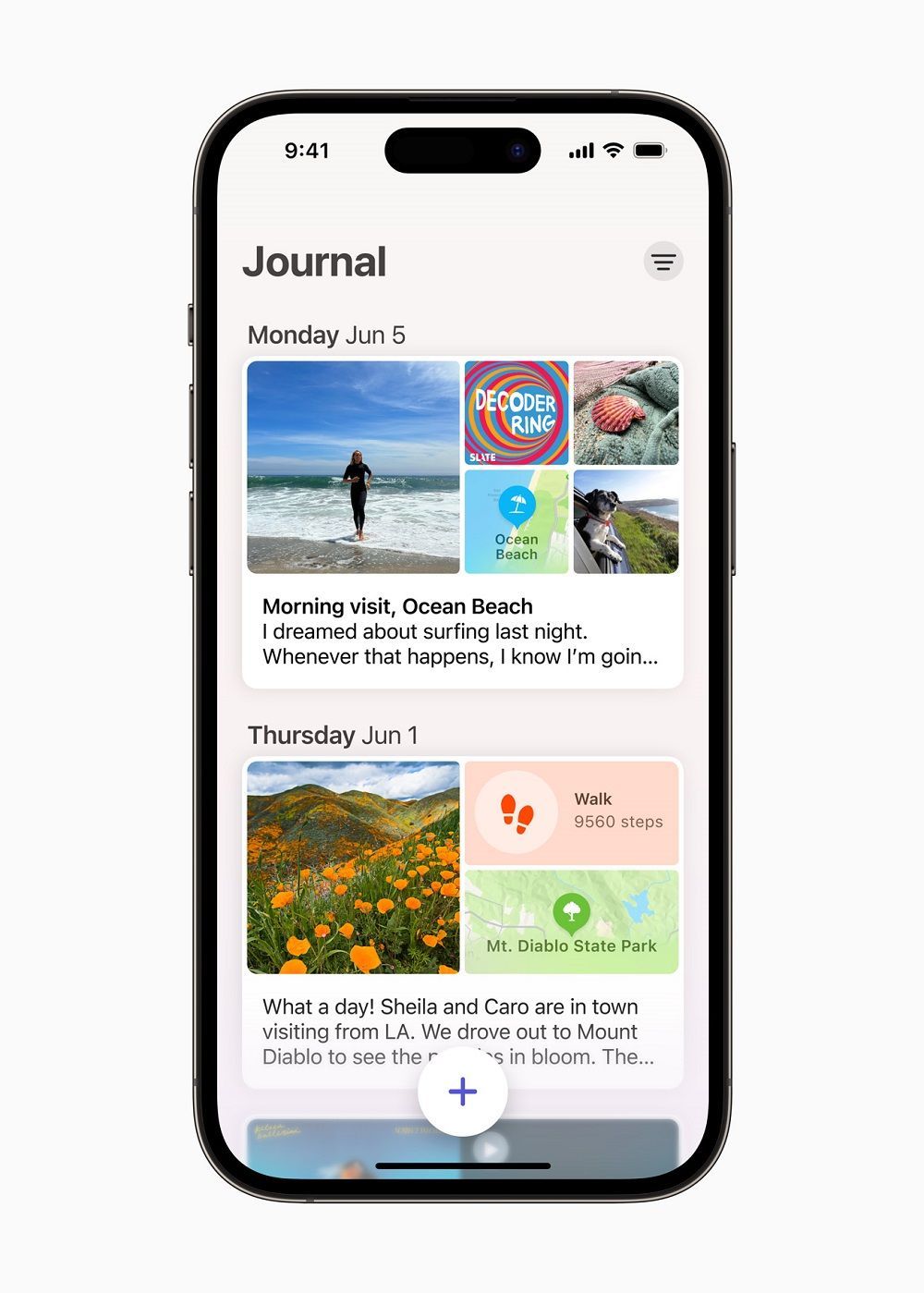

Journal — a new app for journaling on iOS 17

Immediately after the autocorrect and dictation announcement by Federighi, Adeeti Ullal, senior manager, Sensing and Connectivity at Apple, introduced a new app called Journal.

The app was one of the most talked about features following the event. It is essentially a journaling app which lets users keep a record of their moments and memories as a means of improving well-being.

Apple said in a statement that the app, which is expected to roll out in September 2023, uses “on-device machine learning” to make personalised suggestions.

“Suggestions will be intelligently curated from information on your iPhone, like your photos, location, music, workouts, and more,” Ullal said.

Apple said that even the company cannot access a user’s Journal entries, which are protected by end-to-end encryption.

Developers will be able to use a new Journaling Suggestions API to add journaling suggestions to their own apps.

Persona in Vision Pro

Persona is a unique feature that Apple introduced with its Vision Pro at WWDC 2023. The cameras on the AR device can scan a user’s face to create a lifelike likeness, which is then projected to other users during video calls or FaceTime interactions.

It works like a digital clone of the real user: others on the video conference see an avatar that mirrors the user’s gestures and expressions, shown without the Vision Pro headset covering the face.

In its presentation, Apple said that Persona has been “created using Apple’s most advanced ML techniques.”

Other features with Apple machine learning

Apart from the iOS 17 features and the new Journal app, Apple also mentioned machine learning when talking about PDF features in iPadOS, the lock screen on iPad devices, and the Smart Stack widget on Apple Watch.

The Photos app now also uses an improved ML programme to recognise pets and can tell the user’s own cats or dogs apart from other cats and dogs.

Apple announced that AirPods Pro now “uses ML to understand environmental conditions and listening preferences over time” for its Adaptive Audio feature. It can also automatically adjust volume or turn off the noise cancellation feature by sensing the user’s action and environment.

(Hero and Featured images: Apple)
